Summary: If you are following the best practices from your EPM vendor, chances are that you are far from fulfilling one of the core principles of effective data management: establishing a single source of truth. Not only does this jeopardize data consistency, but it also significantly increases your effort during implementation and maintenance.

We live in a world that is driven by data in so many aspects of our lives. While a new wave of technologies for advanced analytics and automation is emerging, many organizations are still struggling to establish a crucial foundation: a Single Source of Truth.

Single Source of Truth – what is it?

Before we start discussing how we can establish a single source of truth, let’s clarify what this term means. There is another term which is sometimes used interchangeably, although it has quite a different meaning and impact: the Single Version of the Truth. Here is a quick differentiation based on an interesting article by Lionel Grealou:

Single Source of Truth (SSoT) is the practice of structuring information models and associated schemata, such that every data element is stored exactly once. From a business perspective, at an organisational level, it means that data is only created at source, in the relevant master system, following a specific process or set of processes. SSoT enables greater data transparency, relevant storage system, traceability, clear ownership, cost effective re-use, etc.

SSoT refers to a data storage principle to always source a particular piece of information from one place.

Single Version of Truth (SVoT) is the practice of delivering clear and accurate data to decision-makers in the form of answers to highly strategic questions. Effective decision-making assumes that accurate and verified data, serving a clear and controlled purpose, and that everyone is trusting and recognising against that purpose. SVoT enables greater data accuracy, uniqueness, timeliness, alignment, etc.

SVoT refers to one view [of data] that everyone in a company agrees is the real, trusted number for some operating data.

https://www.linkedin.com/pulse/single-source-truth-vs-version-lionel-grealou/

While both topics are very important for EPM and overall Digital Transformation projects, we are going to focus on the Single Source of Truth in this article. Single Version of Truth has many more nuances to it and is largely non-technical, even involving company politics in certain ways.

A Common Approach for Loading Data

Ever since organizations started using their internal data for decision making and reporting, it has been difficult to establish a single source of truth. There are many examples of this, depending on the specific situations that customers are dealing with. Today we are going to start with a simple one that is very common.

Let’s assume a company has a General Ledger system and wants to load GL balances to 3 target applications for 3 different purposes:

- Analytics

- Consolidation

- Planning & Budgeting

In addition, the company also wants to

- Transfer Plan data to Analytics

- Transfer Plan data to Consolidation

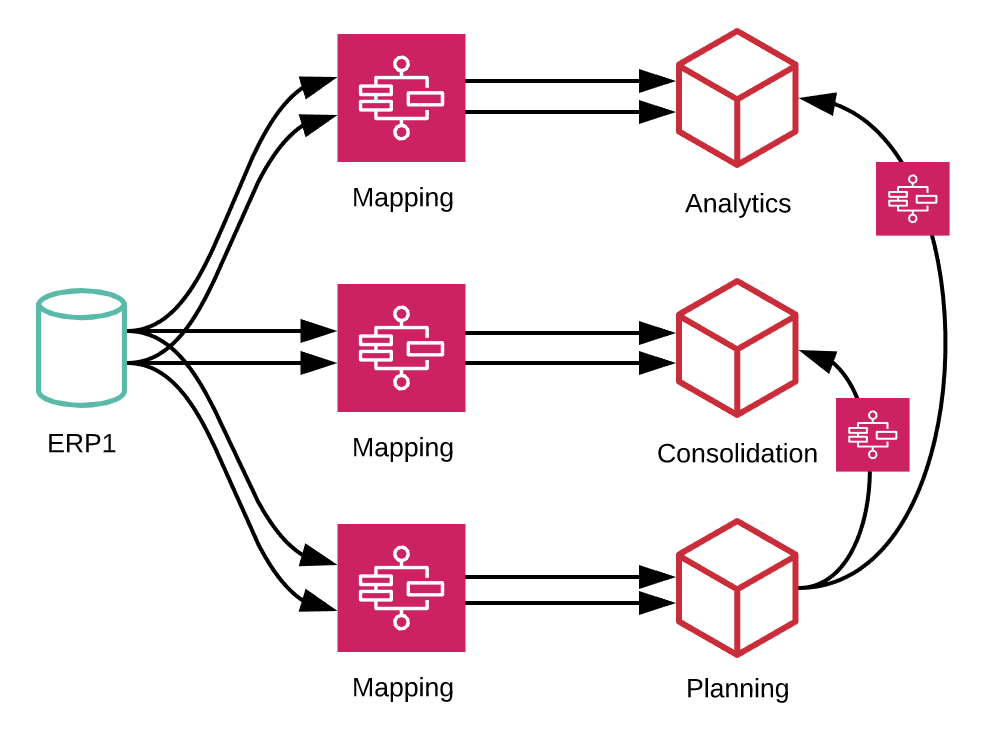

Here is how this data flow typically looks:

What do you see on this Data Flow Diagram?

Let’s start with the data loads from ERP to Analytics, Planning and Consolidation. The most common approach is to build one data load per target application, which means that data is extracted from the source 3 times. Mappings for the Chart of Accounts are usually also required between the source and each target application. On the right-hand side, we see how Plan and Budget information is transferred from the Planning application to Consolidation and Analytics. There is also another activity that happens during this process but is not yet emphasized in this diagram: calculations or aggregations.

Why are there two connecting arrows between ERP, Mappings and the target applications?

We are keeping the diagrams in this blog simple, so the two connecting arrows represent multiple datasets that need to be loaded. Examples from an ERP are:

- General Ledger Balances

- FX Rates

- Headcount information

- Subledgers

- Metadata (Members and Hierarchies)

There are many other examples if you think beyond just ERP integrations, e.g. for Sales Pipeline data or HR information.
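The landscape described above can be sketched as a simple inventory of point-to-point jobs. This is a minimal Python illustration, not a feature of any EPM tool: the targets and the five example datasets come from the diagram and list above, while the assumption that every source/target pair carries its own mapping set and the function names are hypothetical.

```python
from itertools import product

# Hypothetical landscape mirroring the first diagram: one ERP source feeding
# three target applications, plus plan data flowing from Planning to two targets.
ERP_TARGETS = ["Analytics", "Consolidation", "Planning"]
PLAN_TARGETS = ["Analytics", "Consolidation"]

# Example ERP datasets from the list above; in a point-to-point setup each one
# is extracted and loaded separately for every target application.
ERP_DATASETS = ["GL Balances", "FX Rates", "Headcount", "Subledgers", "Metadata"]


def point_to_point_jobs():
    """Return one (source, dataset, target) tuple per point-to-point load."""
    jobs = [("ERP", ds, tgt) for tgt, ds in product(ERP_TARGETS, ERP_DATASETS)]
    jobs += [("Planning", "Plan Data", tgt) for tgt in PLAN_TARGETS]
    return jobs


def mapping_sets(jobs):
    """Assume each distinct source/target pair carries its own mapping set."""
    return sorted({(src, tgt) for src, _, tgt in jobs})


jobs = point_to_point_jobs()
erp_extracts = sum(1 for src, _, _ in jobs if src == "ERP")
print(len(jobs), erp_extracts, len(mapping_sets(jobs)))  # 17 15 5
```

Even in this small landscape, there are 15 separate ERP extract jobs and 5 mapping sets to maintain, and each additional target or dataset multiplies these numbers.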

Challenges for EPM and Finance Transformation

The load approach described in the previous section is commonly implemented in today’s EPM and Analytics environments. It follows the simple methods that EPM software vendors promote through tools and features like FDMEE/Data Management, Essbase Load rules, Anaplan Imports and other direct, so-called “Point-to-Point” integrations.

Sure, this approach seems simple to implement and gets the job done, but it is focused on a very short-term goal: getting data from point A to point B. While the associated risks aren’t obvious at first, there are several negative implications that span various areas of concern.

Let’s focus on the one issue that has the biggest impact:

Data Consistency Across Applications is at Risk

Consistency of data across applications is a result of having a single source of truth. Users of EPM and Analytics applications expect data to be consistent across applications. It is the basis for coming to the same conclusions and making decisions which are aligned across departments.

There are three main problems with the approach described above:

- Timing: data is changing constantly in your source systems. The values that you loaded to your Analytics cube can differ from the values that you are loading to your Consolidation or Planning application. This is true even if the data is processed at the same time.

  Root Cause: although data is extracted from the same source system, possibly with the same logic and at the same time, this results in three different physical extracts. Differences are possible due to the way these extracts are generated in the source application.

- Mappings: take a look at the diagram: at least 5 mappings are required, and this number can easily double or triple if more applications or data feeds are involved.

  Root Cause: the majority of the mappings are identical, but they are typically managed individually. Unless the mappings are very straightforward, changes need to be maintained in multiple places.

- Calculations/Aggregations: after loading the data to the EPM and Analytics applications, it is often necessary to calculate additional KPIs, allocate expenses to departments or compile a functional P&L. The data is also aggregated bottom-up through the hierarchy.

  Root Cause: the logic for calculations can differ, especially if the code base is not identical across applications (e.g. HFM Rules vs. Essbase Calc Scripts). Also, the aggregation operators defined in the various hierarchies can differ, both across applications and across alternate hierarchies within the same application.

Even if the resulting discrepancies are small, this will cause confusion and impact the users’ confidence in their Analytics and EPM applications.
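To make the mapping and aggregation problems concrete, here is a minimal Python sketch with made-up account numbers and balances. It assumes two separately maintained mapping tables and simplified Essbase-style aggregation operators (1 to add, 0 to ignore, loosely resembling `+` and `~`); `load` and `net_income` are hypothetical helpers, not features of any EPM tool.

```python
# Made-up GL extract: source account -> signed balance.
gl_extract = {"4000": 1000.0, "4010": 250.0, "5000": -400.0, "5100": -50.0}

# Two mapping tables that started out identical but are maintained separately.
# Account 5100 was later added to the Analytics mapping only.
analytics_map = {"4000": "Revenue", "4010": "Revenue", "5000": "COGS", "5100": "Opex"}
consolidation_map = {"4000": "Revenue", "4010": "Revenue", "5000": "COGS"}


def load(extract, mapping):
    """Map source accounts to target members and sum the balances.

    Unmapped accounts are simply skipped in this sketch.
    """
    totals = {}
    for account, amount in extract.items():
        member = mapping.get(account)
        if member is not None:
            totals[member] = totals.get(member, 0.0) + amount
    return totals


def net_income(totals, operators):
    """Aggregate bottom-up with per-member operators (1 = add, 0 = ignore).

    Members without an explicit operator default to 1 (add).
    """
    return sum(amount * operators.get(member, 1) for member, amount in totals.items())


analytics = load(gl_extract, analytics_map)
consolidation = load(gl_extract, consolidation_map)

# Same source data, different mapping maintenance -> different results.
print(net_income(analytics, {}), net_income(consolidation, {}))  # 800.0 850.0

# Same mapped data, different aggregation operator on one member.
print(net_income(analytics, {"Opex": 0}))  # 850.0
```

Neither application is "wrong" on its own terms, yet the two numbers disagree by 50, which is exactly the kind of small discrepancy that erodes user confidence.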

In other words: the Root Cause is Redundancy

As we’ve seen, redundancy shows up in three places: source data can be inconsistent due to timing, mappings can be inconsistent, and calculations and/or aggregations can differ within the target applications themselves. Bottom line: by managing data, mappings and logic in multiple places (i.e. redundantly), we are jeopardizing the quality of our data.

Single Source of Truth: Are We There Yet?

The content of this article focuses on a simple example within a very basic system landscape. But even this example already shows that we are far from the vision of a Single Source of Truth.

- Although the source system is the same (an ERP application), there are three different physical extracts.

- Even if these 3 extracts are identical, there are several mappings between the source and target applications. Several dimensions need to be kept in sync, which ultimately leads to redundant maintenance tasks.

- Even with the mappings in place, the logic for calculating additional indicators is spread across multiple applications, so the same data is calculated several times. Since one of the goals of EPM is to react quickly to changing market conditions, every change to that logic has to be replicated in each application. While most EPM teams follow good change management practices, there is definitely a risk here, especially when time is of the essence.

What would this Data Flow look like with a Single Source of Truth?

Organizations that have established a Single Source of Truth generally focus on the three areas mentioned above. What many others don’t realize is that obtaining positive results in these areas is easier than they might think:

- Single Source for Extracts: the goal is to extract data once and then share it with as many downstream systems as possible. It might not be possible to serve all data needs with just one extract, but it is generally possible to streamline the extraction processes for the majority of systems. This has several advantages, as you can read below. An additional positive side effect is reduced load on the source system, as fewer extraction processes need to request resources from the application.

- Centralized Mappings: for many integrations from source to target, the mapping requirements of the different applications are mostly identical. There may be a few differences here and there, but often at least 90% of the mappings can be leveraged across all applications. It is no problem to include more information in a mapping than one specific application requires. Centralizing the mapping step also increases transparency, as administrators can identify any differences at a glance.

- Consistent Logic for Calculations: this area needs to be considered on a case-by-case basis. Depending on the complexity of the calculations and allocations, it is quite possible to take care of them while pre-processing the data, before it is loaded into the target application. Depending on the type of calculation or allocation, this might even reduce processing time. However, EPM and Analytics applications have been built for these types of calculations and allocations. Therefore, we typically recommend creating them in those applications directly to leverage their many benefits. If many applications require the calculated data, you might want to consider moving your logic into one application and then transferring the results from there to the other applications.
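The first two areas can be combined in one small sketch: the staging area receives a single extract, and every target is loaded through one central mapping that is only overridden where a target really differs. This is a hypothetical Python design for illustration; `CentralMapping`, `extract_once` and the assumed `Intercompany Revenue` override are not features of any specific product.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CentralMapping:
    """Hypothetical central mapping: one shared rule set plus small per-target overrides."""

    base: dict[str, str]
    overrides: dict[str, dict[str, str]] = field(default_factory=dict)

    def for_target(self, target: str) -> dict[str, str]:
        # Start from the shared rules, then apply the few target-specific exceptions.
        merged = dict(self.base)
        merged.update(self.overrides.get(target, {}))
        return merged

    def differences(self, target: str) -> dict[str, tuple[Optional[str], str]]:
        # Every deviation from the shared rules is visible in one place.
        return {
            src: (self.base.get(src), member)
            for src, member in self.overrides.get(target, {}).items()
        }


def extract_once() -> dict[str, float]:
    """Stand-in for a single physical GL extract written to a staging area."""
    return {"4000": 1000.0, "4010": 250.0, "5000": -400.0, "5100": -50.0}


def load(staged: dict[str, float], mapping: dict[str, str]) -> dict[str, float]:
    """Map staged source accounts to target members and sum the balances."""
    totals: dict[str, float] = {}
    for account, amount in staged.items():
        member = mapping.get(account)
        if member is not None:
            totals[member] = totals.get(member, 0.0) + amount
    return totals


mapping = CentralMapping(
    base={"4000": "Revenue", "4010": "Revenue", "5000": "COGS", "5100": "Opex"},
    # Assumed exception: Consolidation reports account 4010 separately.
    overrides={"Consolidation": {"4010": "Intercompany Revenue"}},
)

staged = extract_once()  # extracted once, shared by every target below
results = {
    target: load(staged, mapping.for_target(target))
    for target in ["Analytics", "Consolidation", "Planning"]
}
print(mapping.differences("Consolidation"))  # {'4010': ('Revenue', 'Intercompany Revenue')}
```

Because all targets read the same staged extract and share the base rules, their totals agree (800.0 in this example), and the single target-specific deviation can be reviewed in one place.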

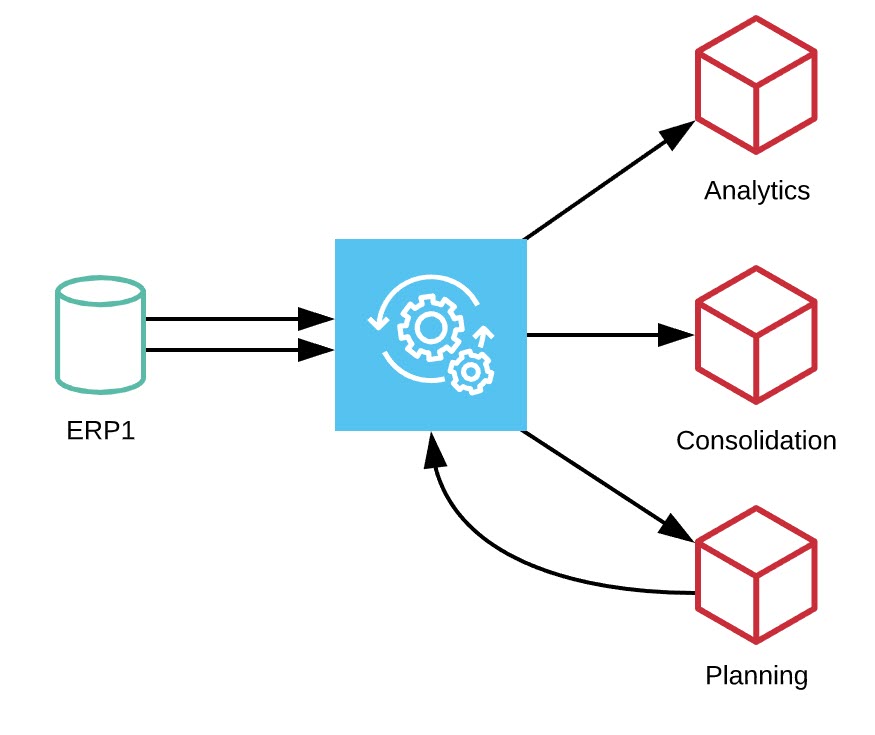

Here is an updated data flow diagram which takes these improvements into consideration:

Would you agree that this is easier?!

What’s Different from the Previous Diagram?

Here is a list of things to note:

- There are still two (or potentially more) extracts from the source system. These are used to process different datasets (like GL balances, FX rates, headcount information, subledgers, metadata). However, after the data is extracted to a centralized staging area, it can be shared with various other applications.

- Mappings are applied in a centralized area. While it might not be possible to map the data from all ERP sources at once, it is definitely possible to manage the majority of them at once.

- The centralized location is intended to act as a data hub which follows the Hub & Spoke architecture we discussed in a previous article.

- If this data hub is organized efficiently, it is possible to load all required datasets for an application at once. This means that only one integration is needed between the data hub and each target application, which is a huge improvement!

- Transferring the plan data to Consolidation and Analytics applications follows the same pattern: plan data is extracted and loaded to the data hub. From there it can be loaded by using the same load process that has already been built for the other data feeds.
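The hub-and-spoke pattern described in this list can be illustrated with a minimal in-memory sketch. `DataHub`, its methods and the sample records are hypothetical; a real data hub would persist staged data, apply the central mappings and call each application's own load mechanism.

```python
from collections import defaultdict


class DataHub:
    """Hypothetical hub-and-spoke sketch: datasets are staged once, and each target
    receives all of its datasets through a single integration."""

    def __init__(self):
        self.staged = {}                          # dataset name -> records
        self.subscriptions = defaultdict(list)    # target -> dataset names
        self.integration_runs = defaultdict(int)  # target -> number of load runs

    def stage(self, dataset, records):
        """Inbound spoke: any source (ERP, Planning, ...) lands its data here."""
        self.staged[dataset] = records

    def subscribe(self, target, *datasets):
        """Register which staged datasets a target application needs."""
        self.subscriptions[target].extend(datasets)

    def load(self, target):
        """Outbound spoke: one integration delivers every staged dataset the target needs."""
        self.integration_runs[target] += 1
        return {ds: self.staged[ds] for ds in self.subscriptions[target] if ds in self.staged}


hub = DataHub()
# Illustrative records only.
hub.stage("GL Balances", {"Revenue": 1250.0, "COGS": -400.0})
hub.stage("FX Rates", {"EUR/USD": 1.1})

hub.subscribe("Planning", "GL Balances", "FX Rates")
hub.subscribe("Analytics", "GL Balances", "FX Rates", "Plan Data")
hub.subscribe("Consolidation", "GL Balances", "FX Rates", "Plan Data")

planning_payload = hub.load("Planning")

# Plan data goes back into the hub and reuses the same outbound load process.
hub.stage("Plan Data", {"Revenue": 1300.0, "COGS": -420.0})
analytics_payload = hub.load("Analytics")
consolidation_payload = hub.load("Consolidation")

print(sorted(analytics_payload))   # ['FX Rates', 'GL Balances', 'Plan Data']
print(dict(hub.integration_runs))  # {'Planning': 1, 'Analytics': 1, 'Consolidation': 1}
```

Each target is served by exactly one outbound integration, and the plan data loop needs no extra point-to-point connection: it is staged like any other dataset.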

Conclusion

There are many advantages for your business in establishing a Single Source of Truth. Not only will it result in consistent data across all your applications, but it will also reduce effort throughout your entire project life cycle. As discussed in previous articles, there is tremendous value in setting up your integration processes according to a strategy. A Single Source of Truth is an important building block for implementing that strategy.

Stay tuned for the next article – and please share your thoughts in the comments below.

In the meantime, please follow us on LinkedIn and find out more about FinTech’s Integration and Automation Platform ICE Cloud. Let’s make EPM Integration and Automation easy!